OCA - a platform for physical apps in the interactive wall

“o-k-ɑ: {from tupi-guarani} (structure) Oca is the name given to the typical Brazilian indigenous housing.”

System Architecture/Design

OCA is a platform that combines a control system for transformable environments with a framework for interconnecting {activity recognition} sensors and physical actuators. On top of those two features, OCA also provides a user input software interface. For the purposes of the thesis, we used a Dial as the {tangible} user input interface.

The main transformable environment used was ESCPod, developed at the MIT Media Lab by the City Science group. It "is an exploratory platform for researchers investigating moments of refuge within our bustling work lives". This platform has 36 {"hackable"} panels, which were used to host sensors and actuators whose positions and functions can be switched easily.

The full paper can be accessed at the MIT Archives.

CONNECT

The main advantage of using OCA is that it lets you deploy sensors in an environment quickly. Why is that an issue? If you check other projects in this portfolio, for example the IoT Motion Sensors project, notice the number of work hours spent before the actual sensors {and infrastructure} were set up and working. We were mainly interested in collecting the data, yet for four weeks of collected data we spent two months designing sensors, building cases and writing code for the database/APIs.

Connecting multiple devices in an environment enables analysis at higher levels of abstraction for Environmental Computing.

OCA has an online platform {under development - I'll probably write a full post just about it}, built with PostgreSQL (on AWS), GraphQL and RESTful APIs, Node.js, React.js and WebGL. In this web app, developers can add new panels, download CAD models and interact with current data.

While adding a panel, developers can declare its data schema, i.e., how other users should interpret the data the panel stores. The panel's spatial position is also defined, along with some other parameters, e.g., IPv4 address and RFID tag number.
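As a rough illustration of what such a panel declaration could hold, here is a minimal sketch. The class name `PanelRegistration`, its field names and the (row, column) position format are all assumptions made for this example; they are not the platform's actual schema.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address
from typing import Dict, Tuple


@dataclass
class PanelRegistration:
    """Hypothetical record for one panel; every field name is illustrative."""

    name: str
    ipv4: str                    # checked in __post_init__
    rfid_tag: str
    position: Tuple[int, int]    # assumed (row, column) slot on the wall
    # Maps each stored field to a human-readable meaning/unit.
    data_schema: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        IPv4Address(self.ipv4)   # raises ValueError on a malformed address
        row, col = self.position
        if row < 0 or col < 0:
            raise ValueError("position must be non-negative")

    def describe(self, key: str) -> str:
        """Return the declared meaning of one stored field."""
        return self.data_schema[key]


panel = PanelRegistration(
    name="thermal-ceiling",
    ipv4="192.168.0.42",
    rfid_tag="04A1B2C3",
    position=(0, 3),
    data_schema={"pixels": "64 temperatures in degrees Celsius, row-major 8x8"},
)
```

Keeping the schema as a plain field-to-meaning mapping is just one option; the point is that another developer can look up what a panel's stored values mean without reading its firmware.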

We are still developing this platform and its data visualizations, but most of it is based on React components that I built previously, using software like ReViz3D (yay! my own app) and Cinema4D, as can be seen in this post: 3D Modeling HTML. The data visualizations are moving towards user-centered views instead of only showing panel data.

A recent server-side change was the adoption of GraphQL instead of REST APIs, due to the flexibility it gives in HTTP requests.
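To make that flexibility concrete: with GraphQL the client names exactly the fields it wants in a single request body, instead of calling one REST endpoint per resource shape. The sketch below only builds the JSON body that is conventionally POSTed to a GraphQL endpoint; the `panel` query and the field names are hypothetical, not OCA's real schema.

```python
import json
from typing import List


def build_panel_query(panel_id: str, fields: List[str]) -> str:
    """Build a GraphQL request body that asks only for the listed fields."""
    # Reject empty selections and anything that is not a plain field name.
    if not fields or not all(f.isidentifier() for f in fields):
        raise ValueError("fields must be a non-empty list of plain names")
    selection = " ".join(fields)
    # `panel` and `$id` are assumed names for this illustration.
    query = "query Panel($id: ID!) { panel(id: $id) { " + selection + " } }"
    return json.dumps({"query": query, "variables": {"id": panel_id}})


# One client asks for network details, another only for the position.
network_body = build_panel_query("panel-07", ["id", "ipv4", "rfidTag"])
position_body = build_panel_query("panel-07", ["position"])
```

Both bodies would go to the same endpoint; only the selection changes.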

Platform - progressive Web App - v1.0

This is an old version. We now have more features, and this section will be updated soon.

SENSE

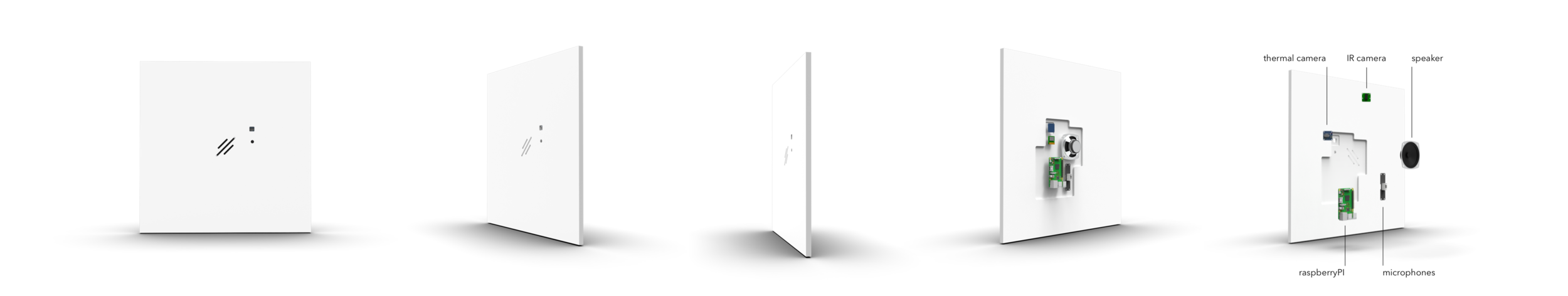

The baseline sensors are thermal cameras with a resolution of 64 pixels (8x8); the chosen devices were AMG8833 {Panasonic} sensors. These sensors sit on the ceiling and on the wall, giving the system rough data about the user's position. Their data might be used for future user activity analysis, providing context for the system's decisions.
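A minimal sketch of how a rough position could be read from one 8x8 frame: reshape the 64 readings into a grid and take the centroid of the warm pixels. The row-major ordering, the 26 °C threshold and the function names are assumptions for this example, not the code that ran in the pod.

```python
from typing import List, Optional, Tuple

GRID = 8  # the AMG8833 reports an 8x8 grid of temperatures


def to_grid(readings: List[float]) -> List[List[float]]:
    """Reshape 64 flat readings into 8 rows of 8 (row-major order assumed)."""
    if len(readings) != GRID * GRID:
        raise ValueError("expected 64 readings")
    return [readings[r * GRID:(r + 1) * GRID] for r in range(GRID)]


def warm_centroid(
    grid: List[List[float]], threshold_c: float = 26.0
) -> Optional[Tuple[float, float]]:
    """Return the mean (row, col) of pixels at or above the threshold.

    Returns None when no pixel is warm, i.e. the view looks empty.
    """
    warm = [
        (r, c)
        for r in range(GRID)
        for c in range(GRID)
        if grid[r][c] >= threshold_c
    ]
    if not warm:
        return None
    n = len(warm)
    return (sum(r for r, _ in warm) / n, sum(c for _, c in warm) / n)


# Synthetic frame: 22 °C background with a 2x2 warm blob.
frame = [22.0] * 64
for r, c in [(2, 3), (2, 4), (3, 3), (3, 4)]:
    frame[r * GRID + c] = 30.0

position = warm_centroid(to_grid(frame))
```

With only 64 pixels the result is coarse, which matches the "rough data" role these sensors play.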

Thermal Cameras structure design

Thermal cameras mounted inside environment

prototype fabricated to validate concept

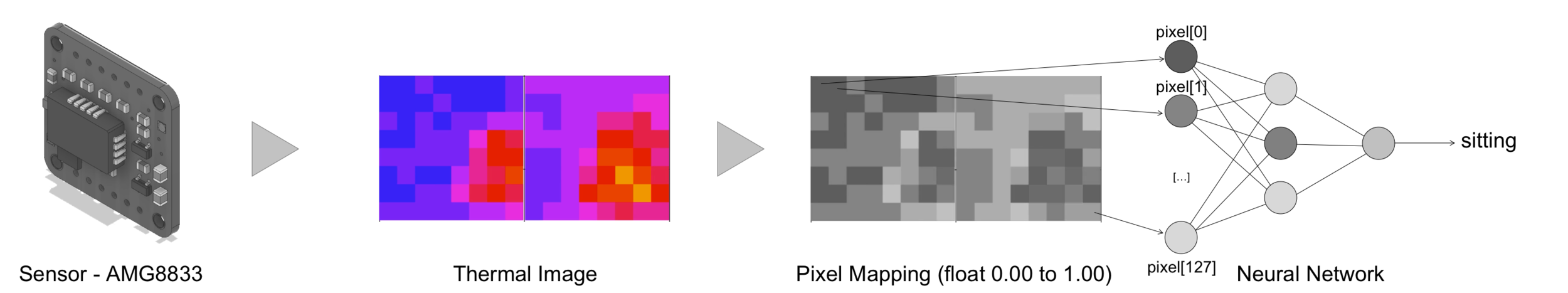

Main processing Unit - Nvidia Tegra 2

The baseline sensors were designed to capture the user's rough position and posture. For the user test we developed software that connects to the CoreAPI, using the GraphQL API for flexibility, to read the thermal camera data, process it as a thermal image and use it to train a neural network. This neural network was then used to classify real-time data based on the training data sets. 16 data sets were used, each covering the 128 pixels of each camera of camera set A in the Figure. With real-time data, the system was able to classify each reading into one of 5 user postures in under 800 ms. Using this set of 16 data sets, with 128 thermal points each, we were able to train a few user postures and positions, e.g. whether the user is lying down on the bed or the environment is empty, as can be seen in the image below.
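To show the shape of that train-then-classify loop without reproducing the actual neural network, here is a sketch that swaps in a much simpler nearest-centroid classifier over 128-value thermal vectors. The labels, temperatures and function names are made up for the illustration.

```python
from typing import Dict, List

POINTS = 128  # thermal points per sample, as in the data sets described above


def mean_vector(samples: List[List[float]]) -> List[float]:
    """Average the samples element by element."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def train(labelled: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Store one mean thermal vector (centroid) per posture label."""
    return {label: mean_vector(samples) for label, samples in labelled.items()}


def squared_distance(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(model: Dict[str, List[float]], frame: List[float]) -> str:
    """Return the label whose centroid is closest to the incoming frame."""
    if len(frame) != POINTS:
        raise ValueError("expected 128 thermal points")
    return min(model, key=lambda label: squared_distance(model[label], frame))


# Synthetic training data: an empty room vs. a warm region on the bed.
empty = [[22.0] * POINTS, [22.4] * POINTS]
lying = [[30.0] * 32 + [22.0] * 96, [29.0] * 32 + [22.2] * 96]
model = train({"empty": empty, "lying_on_bed": lying})

label = classify(model, [29.5] * 32 + [22.1] * 96)
```

A neural network can learn far subtler differences between postures than centroids can, but the data flow (labelled thermal vectors in, one posture label out per real-time frame) is the same.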

ADAPT

The system is designed to interpret user inputs for each panel’s parameters. It therefore needs an interface that allows users to define those parameter values. The system is agnostic about the interface hardware; however, it can currently process only a single type of input: an integer value ranging from 0 to 100.

The device needed to be a simple, tangible interface in order to give users consistent usability. With the same dial, the user can change parameters individually in each panel. Clockwise rotation adds to the total value; counterclockwise (CCW) rotation subtracts from it. Each panel's developers can decide which variable receives this input, which is possible thanks to the API and web platform.
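The input contract is small enough to sketch in a few lines: a single integer kept within 0 to 100, increased by clockwise steps and decreased by counterclockwise ones. The class name `DialValue` and the convention that positive steps mean clockwise are assumptions for this example.

```python
class DialValue:
    """Integer dial value clamped to the 0..100 range the system accepts."""

    def __init__(self, value: int = 0, low: int = 0, high: int = 100) -> None:
        self.low = low
        self.high = high
        self.value = self._clamp(value)

    def _clamp(self, value: int) -> int:
        return max(self.low, min(self.high, value))

    def rotate(self, steps: int) -> int:
        """Apply encoder steps: positive = clockwise, negative = CCW (assumed)."""
        self.value = self._clamp(self.value + steps)
        return self.value


dial = DialValue(50)
after_cw = dial.rotate(10)      # clockwise adds
after_ccw = dial.rotate(-80)    # CCW subtracts and stops at the lower bound
after_spin = dial.rotate(250)   # a long clockwise spin stops at the upper bound
```

Clamping at the edges, rather than wrapping around, keeps the value predictable for whichever panel variable the developer binds it to.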

First concept design of tangible interface interaction

credits: Carson Smuts

Dial Design interactions - using 3D printed models to find a good size/feeling ratio for users.

This device contains a Bluetooth-enabled embedded processing unit and a digital encoder. The dial design was chosen for its simplicity of use and the fact that users don’t need much explanation of how to use it.

New PCB with SMD components + Gyroscope - Schematics Designed on Autodesk Eagle - soldered at FABLab

assembly process - prototype v1.2

Dial - prototype 0.5 - gyroscope and position based on RSSI

Dial - Breadboard prototype

Future Applications